Insights, Inspiration, and Innovation

From beating state-of-the-art models to capturing India's linguistic diversity, explore the stories, science, and breakthroughs shaping the future of voice AI.

Meet ROSE: the world's first self-learning voice model, one that gains experience over time and evolves with every conversation, just like us.

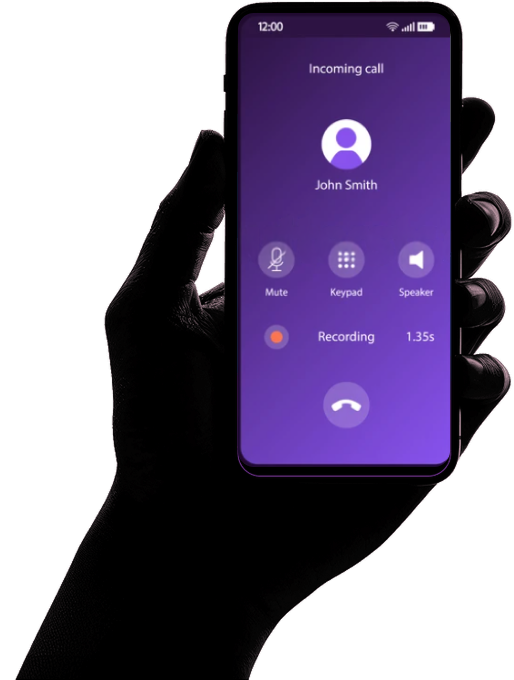

With real-time reasoning, automatic language switching, and an expressive voice, ROSE is built for enterprise-grade calling.

Fluent in 200+ languages and 25+ Indian dialects, with natural tone and emotion.

Powered by Vision, a 250B-parameter multimodal AI model that deeply understands and reasons.

Integrates seamlessly with your tools to trigger workflows, fetch data, and take actions.

It's time to upgrade your stack to real-time, intelligent speech-to-speech agents that listen, think, and talk like humans.

Eliminate the complexity of chaining ASR, TTS, and logic systems. Our unified speech-to-speech API delivers real-time, intelligent voice interaction in a single call.

Unlike traditional STT bots, this model processes speech natively, decoding how people talk, not just what they say. It's voice-first intelligence for real conversations.

Craft human-like voices in moments, from your own voice or from scratch. Deploy 50+ unique voices and launch a full AI call center with unmatched realism.

Define your agent's core behavior, personality, and tone.

Link your APIs, tools, and workflows seamlessly.

Clone your voice in 30 seconds.

Test and launch instantly at scale.

Handling Objections & Questions

Human-Like Voice and Intelligence


ROSE is a ground-up, foundational speech-to-speech model, not a fine-tuned fork of an existing open-source model. It is powered by our in-house foundation model (250 billion parameters, trained end-to-end) and offers an industry-leading 1-million-token context window for ultra-long, coherent conversations. Research paper: https://www.opastpublishers.com/open-access-articles/a-culturally-aware-multimodal-ai-model.pdf

Our foundational model supports a 1-million-token context window, enabling it to understand and retain long conversations, documents, and voice interactions with high consistency, memory, and depth. This makes it especially powerful for multi-turn, multimodal applications such as voice agents and intelligent automation.
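To make "retaining long conversations" concrete, here is a minimal sketch of how a client might keep conversation history inside a fixed token budget, assuming the 1,000,000-token window stated above. The `ConversationMemory` class and the whitespace-based `whitespace_token_count` helper are hypothetical illustrations, not part of any ROSE API; a real model counts tokens with its own tokenizer.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque, List

# Context size stated in the text; everything else here is illustrative.
CONTEXT_WINDOW_TOKENS = 1_000_000


def whitespace_token_count(text: str) -> int:
    """Hypothetical placeholder: counts whitespace-separated words, not real tokens."""
    return len(text.split())


@dataclass
class ConversationMemory:
    """Keeps conversation turns while their total token count fits the budget."""
    budget: int = CONTEXT_WINDOW_TOKENS
    count_tokens: Callable[[str], int] = whitespace_token_count
    turns: Deque[str] = field(default_factory=deque)
    used: int = 0

    def add(self, turn: str) -> None:
        cost = self.count_tokens(turn)
        if cost > self.budget:
            raise ValueError("a single turn exceeds the context window")
        self.turns.append(turn)
        self.used += cost
        # Evict the oldest turns only once the window is actually full.
        while self.used > self.budget:
            self.used -= self.count_tokens(self.turns.popleft())

    def context(self) -> List[str]:
        """Turns currently held in the window, oldest first."""
        return list(self.turns)
```

With a budget as large as one million tokens, the eviction loop only runs for exceptionally long sessions; with a small budget (for example `ConversationMemory(budget=5)`) you can watch the oldest turns drop out as new ones arrive.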

The typical round-trip latency from voice input to final voice output is under 300 milliseconds. This ultra-low latency enables real-time, human-like conversations in which the agent listens, reasons, and responds naturally, without awkward delays.
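If you want to check the sub-300 ms round trip in your own environment, a simple harness is to time each voice-in to voice-out call and take the median as the "typical" value. This is a sketch under stated assumptions: `respond` stands in for whatever client call sends input audio and returns the final output audio (hypothetical here, not a documented ROSE function), and the median is our choice of "typical."

```python
import time
from statistics import median
from typing import Callable, List

# Target from the text: voice input to final voice output under 300 ms.
LATENCY_BUDGET_MS = 300.0


def measure_round_trip_ms(respond: Callable[[bytes], bytes], audio_in: bytes) -> float:
    """Time one round trip: send input audio and wait for the final output audio."""
    start = time.perf_counter()
    respond(audio_in)
    return (time.perf_counter() - start) * 1000.0


def typical_latency_ms(respond: Callable[[bytes], bytes], samples: List[bytes]) -> float:
    """Median round-trip latency across several utterances."""
    return median(measure_round_trip_ms(respond, s) for s in samples)


def within_budget(latency_ms: float, budget_ms: float = LATENCY_BUDGET_MS) -> bool:
    """True when the measured latency is strictly under the budget."""
    return latency_ms < budget_ms


if __name__ == "__main__":
    # Hypothetical stand-in for a speech-to-speech endpoint; swap in a real client call.
    def fake_respond(audio: bytes) -> bytes:
        time.sleep(0.05)  # simulate roughly 50 ms of processing
        return audio

    utterances = [b"\x00" * 320 for _ in range(5)]
    latency = typical_latency_ms(fake_respond, utterances)
    print(f"median round trip: {latency:.1f} ms, within budget: {within_budget(latency)}")
```

Measuring end to end from the client side captures network time as well as model time, which is what a caller actually experiences.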

ROSE uses a unique experiential learning algorithm that allows it to improve with every conversation. Unlike static models, ROSE gains experience over time: it learns from user interactions, adapts to preferences, and continuously refines its responses. It builds memory, recognizes recurring patterns, and evolves contextually, just as a human would.

Our average cost is approximately $0.045 per minute, tiered by volume and feature set. For custom deployments and protocol-specific use cases, please contact our team for a tailored quote.
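For a rough budget, multiply total call minutes by the average rate. The sketch below assumes a flat $0.045 per minute; since actual pricing is tiered by volume and feature set, treat the result as a ballpark estimate rather than a quote. The function name and its parameters are illustrative only.

```python
from decimal import Decimal, ROUND_HALF_UP

# Average rate quoted in the text; real pricing is tiered, so this is an approximation.
AVERAGE_RATE_PER_MINUTE = Decimal("0.045")


def estimate_monthly_cost(calls_per_month: int, avg_minutes_per_call: Decimal,
                          rate: Decimal = AVERAGE_RATE_PER_MINUTE) -> Decimal:
    """Rough cost = total minutes x per-minute rate, rounded to whole cents."""
    total_minutes = Decimal(calls_per_month) * avg_minutes_per_call
    return (total_minutes * rate).quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


if __name__ == "__main__":
    # 10,000 calls x 3 minutes = 30,000 minutes; 30,000 x $0.045 = $1,350.00
    print(estimate_monthly_cost(10_000, Decimal("3")))  # prints 1350.00
```

`Decimal` is used instead of `float` so that currency amounts such as 0.045 are represented exactly before rounding to cents.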

Take the leap to expressive, real-time speech-to-speech calling. Our technology powers human-like voice agents that listen, speak, and adapt just like your best rep.

We are still fine-tuning the product and adding new features, and we would love your help. Join our beta to contribute.